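I analyzed the Camera implementation earlier. Now let's look at how MediaRecorder is implemented. I won't dwell much on its layering here; I'm more interested in its logic!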

App layer: /path/to/aosp/frameworks/base/media/java/android/media/MediaRecorder.java

JNI layer: /path/to/aosp/frameworks/base/media/jni/android_media_MediaRecorder.cpp

The JNI layer calls the native MediaRecorder (which here is a BnMediaRecorderClient):

Header: /path/to/aosp/frameworks/av/include/media/mediarecorder.h

Implementation: /path/to/aosp/frameworks/av/media/libmedia/mediarecorder.cpp

```cpp
MediaRecorder::MediaRecorder() : mSurfaceMediaSource(NULL)
{
    ALOGV("constructor");

    const sp<IMediaPlayerService>& service(getMediaPlayerService());
    if (service != NULL) {
        mMediaRecorder = service->createMediaRecorder(getpid());
    }
    if (mMediaRecorder != NULL) {
        mCurrentState = MEDIA_RECORDER_IDLE;
    }

    doCleanUp();
}
```

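getMediaPlayerService() is declared in /path/to/aosp/frameworks/av/include/media/IMediaDeathNotifier.h.

Once it has the MediaPlayerService (a BpMediaPlayerService here), the constructor calls createMediaRecorder() on IMediaPlayerService. On the service side this is implemented as: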

```cpp
sp<IMediaRecorder> MediaPlayerService::createMediaRecorder(pid_t pid)
{
    sp<MediaRecorderClient> recorder = new MediaRecorderClient(this, pid);
    wp<MediaRecorderClient> w = recorder;
    Mutex::Autolock lock(mLock);
    mMediaRecorderClients.add(w);
    ALOGV("Create new media recorder client from pid %d", pid);
    return recorder;
}
```
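The service returns a strong reference to the new client but records only a weak reference (`wp`) in `mMediaRecorderClients`. Its registry therefore does not keep clients alive by itself. Below is a minimal sketch of that idea using `std::shared_ptr`/`std::weak_ptr`. `RecorderClient` and `RecorderService` are hypothetical stand-ins, not AOSP classes, and pruning expired entries lazily is this sketch's own simplification.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <memory>
#include <mutex>
#include <vector>

// Hypothetical stand-in for MediaRecorderClient.
struct RecorderClient {
    explicit RecorderClient(int pid) : pid(pid) {}
    int pid;
};

// Hypothetical stand-in for the recorder bookkeeping in MediaPlayerService.
class RecorderService {
public:
    // Like createMediaRecorder(): hand the caller a strong reference,
    // but keep only a weak one in the registry.
    std::shared_ptr<RecorderClient> createRecorder(int pid) {
        auto recorder = std::make_shared<RecorderClient>(pid);
        std::lock_guard<std::mutex> lock(mLock);
        mClients.push_back(recorder);  // stored as std::weak_ptr
        return recorder;
    }

    // Count clients that are still alive, dropping expired entries.
    std::size_t liveClients() {
        std::lock_guard<std::mutex> lock(mLock);
        mClients.erase(std::remove_if(mClients.begin(), mClients.end(),
                           [](const std::weak_ptr<RecorderClient>& w) { return w.expired(); }),
                       mClients.end());
        return mClients.size();
    }

private:
    std::mutex mLock;
    std::vector<std::weak_ptr<RecorderClient>> mClients;
};
```

Once the last strong reference held by a caller goes away, the registry entry simply expires. No explicit unregister step is needed for the object to be freed.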

This creates a MediaRecorderClient (a BnMediaRecorder). What the client receives through binder, however, is a BpMediaRecorder, because of the following interface_cast:

```cpp
// Client-side proxy (BpMediaPlayerService)
virtual sp<IMediaRecorder> createMediaRecorder(pid_t pid)
{
    Parcel data, reply;
    data.writeInterfaceToken(IMediaPlayerService::getInterfaceDescriptor());
    data.writeInt32(pid);
    remote()->transact(CREATE_MEDIA_RECORDER, data, &reply);
    return interface_cast<IMediaRecorder>(reply.readStrongBinder());
}
```
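The Bp/Bn split can be hard to picture, so here is an in-process model of it with no real IPC. A "transaction" is just a virtual call carrying a vector of ints. `IBinderLike`, `BnRecorder`, `BpRecorder` and `asRecorderProxy` are all hypothetical names. The real `interface_cast` goes through `asInterface()`, which returns the local object when the binder lives in the same process and a Bp proxy otherwise. This sketch models only the proxy case.

```cpp
#include <cassert>
#include <cstdint>
#include <memory>
#include <vector>

// Hypothetical, heavily simplified stand-ins for Parcel and IBinder.
using Parcel = std::vector<int32_t>;

struct IBinderLike {
    virtual ~IBinderLike() = default;
    virtual void transact(uint32_t code, const Parcel& data, Parcel* reply) = 0;
};

// The interface both sides agree on.
struct IRecorder {
    virtual ~IRecorder() = default;
    virtual int32_t start(int32_t fd) = 0;
};

enum : uint32_t { START = 1 };

// Bn side: the real implementation, living in the service process.
class BnRecorder : public IRecorder, public IBinderLike {
public:
    int32_t start(int32_t fd) override { lastFd = fd; return 0; /* OK */ }
    void transact(uint32_t code, const Parcel& data, Parcel* reply) override {
        // Like onTransact(): unmarshal, call the local method, marshal the result.
        if (code == START) reply->push_back(start(data.at(0)));
    }
    int32_t lastFd = -1;
};

// Bp side: only marshals arguments and forwards them to the remote binder.
class BpRecorder : public IRecorder {
public:
    explicit BpRecorder(std::shared_ptr<IBinderLike> remote) : mRemote(std::move(remote)) {}
    int32_t start(int32_t fd) override {
        Parcel data{fd}, reply;
        mRemote->transact(START, data, &reply);
        return reply.at(0);
    }
private:
    std::shared_ptr<IBinderLike> mRemote;
};

// What interface_cast conceptually does for a remote binder:
// wrap the untyped binder in a typed proxy.
std::shared_ptr<IRecorder> asRecorderProxy(std::shared_ptr<IBinderLike> binder) {
    return std::make_shared<BpRecorder>(std::move(binder));
}
```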

MediaRecorderClient in turn creates a StagefrightRecorder (a MediaRecorderBase), located in /path/to/aosp/frameworks/av/media/libmediaplayerservice/StagefrightRecorder.cpp

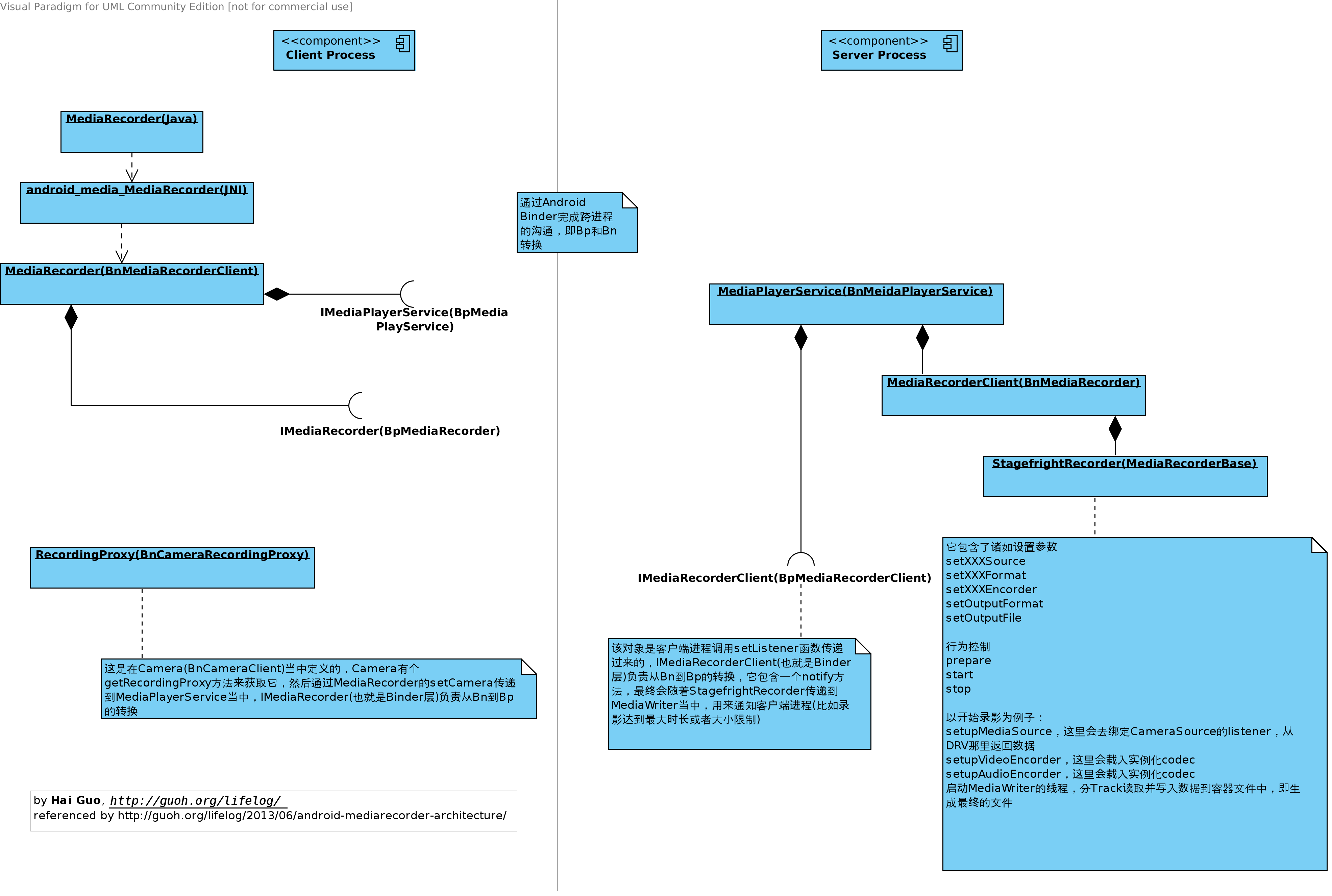

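For now, think of APP/JNI/NATIVE as living in one process. MediaRecorderClient and StagefrightRecorder inside MediaPlayerService live in another process, and the two sides talk over binder. We have now seen both the Bp and the Bn sides, so from here on I won't carefully distinguish between them.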

Client side:

- BnMediaRecorderClient
- BpMediaRecorder
- BpMediaPlayerService

Server side:

- BpMediaRecorderClient (the server can obtain this Bp when it needs to notify the client)
- BnMediaRecorder
- BnMediaPlayerService

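Let's take starting a recording, i.e. start(), as an example. For MPEG-4 output, StagefrightRecorder::start() ends up in startMPEG4Recording().

Here the work splits into two paths: a CameraSource and an MPEG4Writer (sp

Both classes live in /path/to/aosp/frameworks/av/media/libstagefright/.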

```cpp
status_t StagefrightRecorder::startMPEG4Recording() {
    int32_t totalBitRate;
    status_t err = setupMPEG4Recording(
            mOutputFd, mVideoWidth, mVideoHeight,
            mVideoBitRate, &totalBitRate, &mWriter);
    if (err != OK) {
        return err;
    }

    int64_t startTimeUs = systemTime() / 1000;
    sp<MetaData> meta = new MetaData;
    setupMPEG4MetaData(startTimeUs, totalBitRate, &meta);

    err = mWriter->start(meta.get());
    if (err != OK) {
        return err;
    }

    return OK;
}
```

```cpp
status_t StagefrightRecorder::setupMPEG4Recording(
        int outputFd,
        int32_t videoWidth, int32_t videoHeight,
        int32_t videoBitRate,
        int32_t *totalBitRate,
        sp<MediaWriter> *mediaWriter) {
    mediaWriter->clear();
    *totalBitRate = 0;
    status_t err = OK;
    sp<MediaWriter> writer = new MPEG4Writer(outputFd);

    if (mVideoSource < VIDEO_SOURCE_LIST_END) {
        sp<MediaSource> mediaSource;
        err = setupMediaSource(&mediaSource); // very important
        if (err != OK) {
            return err;
        }

        sp<MediaSource> encoder;
        err = setupVideoEncoder(mediaSource, videoBitRate, &encoder); // very important
        if (err != OK) {
            return err;
        }

        writer->addSource(encoder);
        *totalBitRate += videoBitRate;
    }

    // Audio source is added at the end if it exists.
    // This helps make sure that the "recording" sound is suppressed for
    // camcorder applications in the recorded files.
    if (!mCaptureTimeLapse && (mAudioSource != AUDIO_SOURCE_CNT)) {
        err = setupAudioEncoder(writer); // very important
        if (err != OK) return err;
        *totalBitRate += mAudioBitRate;
    }

    // ...

    writer->setListener(mListener);
    *mediaWriter = writer;
    return OK;
}
```
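Stripped of the codec details, setupMPEG4Recording() does three things. It chains camera → encoder → writer track, adds audio last and only when not in time-lapse mode, and sums the bit rates of the tracks it adds. A minimal sketch of that assembly logic, where `Source`, `Writer` and `setupRecording` are hypothetical simplifications:

```cpp
#include <cassert>
#include <cstdint>
#include <memory>
#include <string>
#include <vector>

// Hypothetical stand-in for a MediaSource: just a name in this sketch.
struct Source {
    explicit Source(std::string n) : name(std::move(n)) {}
    std::string name;
};

// Hypothetical stand-in for MPEG4Writer: one track per added source.
struct Writer {
    void addSource(std::shared_ptr<Source> s) { tracks.push_back(std::move(s)); }
    std::vector<std::shared_ptr<Source>> tracks;
};

// Same shape as setupMPEG4Recording(): video first, audio last,
// summing the bit rate of every track that is added.
std::shared_ptr<Writer> setupRecording(bool hasVideo, int32_t videoBitRate,
                                       bool hasAudio, bool timeLapse, int32_t audioBitRate,
                                       int32_t* totalBitRate) {
    *totalBitRate = 0;
    auto writer = std::make_shared<Writer>();
    if (hasVideo) {
        auto camera = std::make_shared<Source>("camera");                          // cf. setupMediaSource()
        auto encoder = std::make_shared<Source>("video-encoder:" + camera->name);  // cf. setupVideoEncoder()
        writer->addSource(encoder);
        *totalBitRate += videoBitRate;
    }
    // Audio is skipped for time-lapse and otherwise added after video.
    if (hasAudio && !timeLapse) {
        writer->addSource(std::make_shared<Source>("audio-encoder"));
        *totalBitRate += audioBitRate;
    }
    return writer;
}
```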

```cpp
// Set up the appropriate MediaSource depending on the chosen option
status_t StagefrightRecorder::setupMediaSource(
        sp<MediaSource> *mediaSource) {
    if (mVideoSource == VIDEO_SOURCE_DEFAULT
            || mVideoSource == VIDEO_SOURCE_CAMERA) {
        sp<CameraSource> cameraSource;
        status_t err = setupCameraSource(&cameraSource);
        if (err != OK) {
            return err;
        }
        *mediaSource = cameraSource;
    } else if (mVideoSource == VIDEO_SOURCE_GRALLOC_BUFFER) {
        // If using GRAlloc buffers, setup surfacemediasource.
        // Later a handle to that will be passed
        // to the client side when queried
        status_t err = setupSurfaceMediaSource();
        if (err != OK) {
            return err;
        }
        *mediaSource = mSurfaceMediaSource;
    } else {
        return INVALID_OPERATION;
    }
    return OK;
}
```

```cpp
status_t StagefrightRecorder::setupCameraSource(
        sp<CameraSource> *cameraSource) {
    status_t err = OK;
    if ((err = checkVideoEncoderCapabilities()) != OK) {
        return err;
    }
    Size videoSize;
    videoSize.width = mVideoWidth;
    videoSize.height = mVideoHeight;
    if (mCaptureTimeLapse) {
        if (mTimeBetweenTimeLapseFrameCaptureUs < 0) {
            ALOGE("Invalid mTimeBetweenTimeLapseFrameCaptureUs value: %lld",
                mTimeBetweenTimeLapseFrameCaptureUs);
            return BAD_VALUE;
        }

        mCameraSourceTimeLapse = CameraSourceTimeLapse::CreateFromCamera(
                mCamera, mCameraProxy, mCameraId,
                videoSize, mFrameRate, mPreviewSurface,
                mTimeBetweenTimeLapseFrameCaptureUs);
        *cameraSource = mCameraSourceTimeLapse;
    } else {
        *cameraSource = CameraSource::CreateFromCamera(
                mCamera, mCameraProxy, mCameraId, videoSize, mFrameRate,
                mPreviewSurface, true /*storeMetaDataInVideoBuffers*/);
    }
    mCamera.clear();
    mCameraProxy.clear();
    if (*cameraSource == NULL) {
        return UNKNOWN_ERROR;
    }

    if ((*cameraSource)->initCheck() != OK) {
        (*cameraSource).clear();
        *cameraSource = NULL;
        return NO_INIT;
    }

    // When frame rate is not set, the actual frame rate will be set to
    // the current frame rate being used.
    if (mFrameRate == -1) {
        int32_t frameRate = 0;
        CHECK ((*cameraSource)->getFormat()->findInt32(
                    kKeyFrameRate, &frameRate));
        ALOGI("Frame rate is not explicitly set. Use the current frame "
              "rate (%d fps)", frameRate);
        mFrameRate = frameRate;
    }

    CHECK(mFrameRate != -1);

    mIsMetaDataStoredInVideoBuffers =
        (*cameraSource)->isMetaDataStoredInVideoBuffers();

    return OK;
}
```
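The `mFrameRate == -1` branch treats -1 as "not set by the app" and adopts whatever rate the camera is currently using. A tiny sketch of that fallback, assuming we model a failed `findInt32()` lookup as an empty `std::optional` (in AOSP that case trips the `CHECK` instead). `resolveFrameRate` is a hypothetical helper:

```cpp
#include <cassert>
#include <cstdint>
#include <optional>

// -1 means "frame rate not set by the app": fall back to the camera's current rate.
// std::nullopt for cameraCurrent stands for the format lookup failing.
std::optional<int32_t> resolveFrameRate(int32_t requested, std::optional<int32_t> cameraCurrent) {
    if (requested != -1) return requested;
    return cameraCurrent;
}
```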

```cpp
status_t StagefrightRecorder::setupVideoEncoder(
        sp<MediaSource> cameraSource,
        int32_t videoBitRate,
        sp<MediaSource> *source) {
    source->clear();

    sp<MetaData> enc_meta = new MetaData;
    enc_meta->setInt32(kKeyBitRate, videoBitRate);
    enc_meta->setInt32(kKeyFrameRate, mFrameRate);

    switch (mVideoEncoder) {
        case VIDEO_ENCODER_H263:
            enc_meta->setCString(kKeyMIMEType, MEDIA_MIMETYPE_VIDEO_H263);
            break;

        case VIDEO_ENCODER_MPEG_4_SP:
            enc_meta->setCString(kKeyMIMEType, MEDIA_MIMETYPE_VIDEO_MPEG4);
            break;

        case VIDEO_ENCODER_H264:
            enc_meta->setCString(kKeyMIMEType, MEDIA_MIMETYPE_VIDEO_AVC);
            break;

        default:
            CHECK(!"Should not be here, unsupported video encoding.");
            break;
    }

    sp<MetaData> meta = cameraSource->getFormat();

    int32_t width, height, stride, sliceHeight, colorFormat;
    CHECK(meta->findInt32(kKeyWidth, &width));
    CHECK(meta->findInt32(kKeyHeight, &height));
    CHECK(meta->findInt32(kKeyStride, &stride));
    CHECK(meta->findInt32(kKeySliceHeight, &sliceHeight));
    CHECK(meta->findInt32(kKeyColorFormat, &colorFormat));

    enc_meta->setInt32(kKeyWidth, width);
    enc_meta->setInt32(kKeyHeight, height);
    enc_meta->setInt32(kKeyIFramesInterval, mIFramesIntervalSec);
    enc_meta->setInt32(kKeyStride, stride);
    enc_meta->setInt32(kKeySliceHeight, sliceHeight);
    enc_meta->setInt32(kKeyColorFormat, colorFormat);
    if (mVideoTimeScale > 0) {
        enc_meta->setInt32(kKeyTimeScale, mVideoTimeScale);
    }
    if (mVideoEncoderProfile != -1) {
        enc_meta->setInt32(kKeyVideoProfile, mVideoEncoderProfile);
    }
    if (mVideoEncoderLevel != -1) {
        enc_meta->setInt32(kKeyVideoLevel, mVideoEncoderLevel);
    }

    OMXClient client;
    CHECK_EQ(client.connect(), (status_t)OK);

    uint32_t encoder_flags = 0;
    if (mIsMetaDataStoredInVideoBuffers) {
        encoder_flags |= OMXCodec::kStoreMetaDataInVideoBuffers;
    }

    // Do not wait for all the input buffers to become available.
    // This give timelapse video recording faster response in
    // receiving output from video encoder component.
    if (mCaptureTimeLapse) {
        encoder_flags |= OMXCodec::kOnlySubmitOneInputBufferAtOneTime;
    }

    sp<MediaSource> encoder = OMXCodec::Create(
            client.interface(), enc_meta,
            true /* createEncoder */, cameraSource,
            NULL, encoder_flags);
    if (encoder == NULL) {
        ALOGW("Failed to create the encoder");
        // When the encoder fails to be created, we need
        // release the camera source due to the camera's lock
        // and unlock mechanism.
        cameraSource->stop();
        return UNKNOWN_ERROR;
    }

    *source = encoder;

    return OK;
}
```
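Two small decisions in setupVideoEncoder() carry most of its configuration. One maps the chosen encoder to a MIME type. The other builds a flag bitmask from metadata mode and time-lapse mode. A sketch of both follows. The MIME strings are the values stagefright uses for `MEDIA_MIMETYPE_VIDEO_H263`/`_MPEG4`/`_AVC`. The enum and the flag bit values are hypothetical; the real `OMXCodec` constants have their own values.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// Hypothetical enum mirroring the encoders handled in setupVideoEncoder().
enum class VideoEncoder { H263, MPEG_4_SP, H264 };

// MIME types as used by stagefright for these codecs.
std::string mimeFor(VideoEncoder e) {
    switch (e) {
        case VideoEncoder::H263:      return "video/3gpp";
        case VideoEncoder::MPEG_4_SP: return "video/mp4v-es";
        case VideoEncoder::H264:      return "video/avc";
    }
    return "";  // unreachable for valid enum values
}

// Hypothetical flag bits standing in for the OMXCodec flags.
constexpr uint32_t kStoreMetaData = 1u << 0;
constexpr uint32_t kOneInputBufferAtATime = 1u << 1;

uint32_t encoderFlags(bool metaDataInVideoBuffers, bool timeLapse) {
    uint32_t flags = 0;
    if (metaDataInVideoBuffers) flags |= kStoreMetaData;  // encoder input refers to camera memory
    if (timeLapse) flags |= kOneInputBufferAtATime;       // don't wait for all input buffers
    return flags;
}
```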

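This is where it ties into OMXCodec.

A configuration file called media_codecs.xml declares which codecs the device supports.

When recording MPEG-4 we usually capture audio as well, so setupAudioEncoder comes next. I won't go through it in detail; in short, it adds the audio to the MPEG4Writer as another track.

A side note: Google says setupAudioEncoder is called last so that the start-of-recording beep is not captured. In practice this is still buggy, and on some devices the beep does get recorded. People who ran into this generally worked around it by having the app play the sound itself.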

In addition, MPEG4Writer::start(MetaData*) kicks off two things:

a) startWriterThread, which starts a thread that does the actual writing:

```cpp
void MPEG4Writer::threadFunc() {
    ALOGV("threadFunc");

    prctl(PR_SET_NAME, (unsigned long)"MPEG4Writer", 0, 0, 0);

    Mutex::Autolock autoLock(mLock);
    while (!mDone) {
        Chunk chunk;
        bool chunkFound = false;

        while (!mDone && !(chunkFound = findChunkToWrite(&chunk))) {
            mChunkReadyCondition.wait(mLock);
        }

        // Actual write without holding the lock in order to
        // reduce the blocking time for media track threads.
        if (chunkFound) {
            mLock.unlock();
            writeChunkToFile(&chunk);
            mLock.lock();
        }
    }

    writeAllChunks();
}
```
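threadFunc() is a classic single-consumer loop. It waits on a condition variable until a chunk is ready, drops the lock while doing the slow file write so track threads are not blocked, and flushes everything left once `mDone` is set. Here is the same pattern with standard C++ threads. `ChunkWriter` is a hypothetical class, chunks are plain ints, and "writing" just appends to a vector.

```cpp
#include <cassert>
#include <condition_variable>
#include <deque>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical simplification of MPEG4Writer's writer thread.
// stop() must be called before the object is destroyed.
class ChunkWriter {
public:
    void start() { mThread = std::thread([this] { threadFunc(); }); }

    // Called by track threads when a chunk is ready (cf. mChunkReadyCondition.signal()).
    void pushChunk(int chunk) {
        {
            std::lock_guard<std::mutex> lock(mLock);
            mPending.push_back(chunk);
        }
        mChunkReady.notify_one();
    }

    // Ask the thread to finish, wait for it, and return what was written.
    std::vector<int> stop() {
        {
            std::lock_guard<std::mutex> lock(mLock);
            mDone = true;
        }
        mChunkReady.notify_one();
        mThread.join();
        return mWritten;
    }

private:
    void threadFunc() {
        std::unique_lock<std::mutex> lock(mLock);
        while (!mDone) {
            mChunkReady.wait(lock, [this] { return mDone || !mPending.empty(); });
            if (mPending.empty()) continue;  // woken only because mDone was set
            int chunk = mPending.front();
            mPending.pop_front();
            lock.unlock();              // write without holding the lock
            mWritten.push_back(chunk);  // stands in for writeChunkToFile()
            lock.lock();
        }
        // Like writeAllChunks(): flush whatever is still queued.
        while (!mPending.empty()) {
            mWritten.push_back(mPending.front());
            mPending.pop_front();
        }
    }

    std::mutex mLock;
    std::condition_variable mChunkReady;
    std::deque<int> mPending;
    std::vector<int> mWritten;  // touched only by the writer thread until join()
    bool mDone = false;
    std::thread mThread;
};
```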

b) startTracks

```cpp
status_t MPEG4Writer::startTracks(MetaData *params) {
    for (List<Track *>::iterator it = mTracks.begin();
         it != mTracks.end(); ++it) {
        status_t err = (*it)->start(params);

        if (err != OK) {
            for (List<Track *>::iterator it2 = mTracks.begin();
                 it2 != it; ++it2) {
                (*it2)->stop();
            }

            return err;
        }
    }
    return OK;
}
```
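The rollback in startTracks() is worth noting: if any track fails to start, every track started before it is stopped again, so the writer never ends up half-started. A sketch of the same all-or-nothing loop, where `FakeTrack` is a hypothetical track that can be told to fail:

```cpp
#include <cassert>
#include <vector>

// Hypothetical track that records whether it is running and can be told to fail.
struct FakeTrack {
    bool failOnStart = false;
    bool started = false;
    bool start() { if (failOnStart) return false; started = true; return true; }
    void stop() { started = false; }
};

// Same shape as MPEG4Writer::startTracks(): start in order; on failure,
// stop the tracks already started and report the error.
bool startTracks(std::vector<FakeTrack>& tracks) {
    for (auto it = tracks.begin(); it != tracks.end(); ++it) {
        if (!it->start()) {
            for (auto it2 = tracks.begin(); it2 != it; ++it2) it2->stop();
            return false;
        }
    }
    return true;
}
```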

It then calls each Track's start method:

```cpp
status_t MPEG4Writer::Track::start(MetaData *params) {
    // ...
    initTrackingProgressStatus(params);
    // ...
    status_t err = mSource->start(meta.get()); // this ends up running CameraSource::start(); the two are linked
    // ...
    pthread_create(&mThread, &attr, ThreadWrapper, this);
    return OK;
}
```

```cpp
void *MPEG4Writer::Track::ThreadWrapper(void *me) {
    Track *track = static_cast<Track *>(me);

    status_t err = track->threadEntry();
    return (void *) err;
}
```

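status_t MPEG4Writer::Track::threadEntry() runs in yet another newly started thread. It loops, repeatedly reading data from CameraSource (read) and writing it to the file. The data in CameraSource naturally comes from the driver (see CameraSourceListener). CameraSource keeps a List called mFramesReceived dedicated to frames coming from the driver and calls mFrameAvailableCondition.signal() whenever a frame arrives. Frames that arrive before recording has started are discarded. Note that the MediaWriter first starts the CameraSource (its start method) and only then starts the track-writing thread.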
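The receive path just described is a blocking queue with a gate. The camera callback appends frames only once recording has started and signals a condition variable, and read() blocks until a frame is available. Below is a minimal sketch of that behavior. `FrameQueue` is hypothetical, with member names that only echo those in the text, and frames are reduced to timestamps.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstdint>
#include <list>
#include <mutex>
#include <optional>

// Hypothetical simplification of CameraSource's receive path.
class FrameQueue {
public:
    void start() { std::lock_guard<std::mutex> l(mLock); mStarted = true; }
    void stop() {
        { std::lock_guard<std::mutex> l(mLock); mStarted = false; }
        mFrameAvailable.notify_all();
    }

    // Called from the camera data callback.
    void onFrame(int64_t timestampUs) {
        {
            std::lock_guard<std::mutex> l(mLock);
            if (!mStarted) { ++mDropped; return; }  // not recording yet: discard
            mFramesReceived.push_back(timestampUs);
        }
        mFrameAvailable.notify_one();  // cf. mFrameAvailableCondition.signal()
    }

    // Blocks until a frame arrives; returns nullopt when not started and nothing is queued.
    std::optional<int64_t> read() {
        std::unique_lock<std::mutex> l(mLock);
        mFrameAvailable.wait(l, [this] { return !mFramesReceived.empty() || !mStarted; });
        if (mFramesReceived.empty()) return std::nullopt;
        int64_t ts = mFramesReceived.front();
        mFramesReceived.pop_front();
        return ts;
    }

    int dropped() { std::lock_guard<std::mutex> l(mLock); return mDropped; }

private:
    std::mutex mLock;
    std::condition_variable mFrameAvailable;
    std::list<int64_t> mFramesReceived;
    bool mStarted = false;
    int mDropped = 0;
};
```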

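Note: strictly speaking, what MPEG4Writer reads here is OMXCodec's output. Data reaches CameraSource first, the codec encodes it, and only then does MPEG4Writer write it to the file! For how buffers move between CameraSource, OMXCodec and MPEG4Writer, see the section on buffer passing in http://guoh.org/lifelog/2013/06/interaction-between-stagefright-and-codec/.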
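The chain works as a pull model. The writer track calls read() on the encoder, and the encoder calls read() on the camera source whenever it needs more input. A sketch of that shape with a hypothetical `PullSource` interface loosely modeled on `MediaSource::read()`. The fake encoder only wraps strings instead of encoding anything.

```cpp
#include <cassert>
#include <memory>
#include <optional>
#include <string>
#include <vector>

// Hypothetical pull interface: nullopt means end of stream.
struct PullSource {
    virtual ~PullSource() = default;
    virtual std::optional<std::string> read() = 0;
};

// Stands in for CameraSource: yields raw frames.
struct FakeCamera : PullSource {
    explicit FakeCamera(int n) : remaining(n) {}
    std::optional<std::string> read() override {
        if (remaining == 0) return std::nullopt;
        return "raw" + std::to_string(remaining--);
    }
    int remaining;
};

// Stands in for the encoder: pulls from its upstream source on demand.
struct FakeEncoder : PullSource {
    explicit FakeEncoder(std::shared_ptr<PullSource> up) : upstream(std::move(up)) {}
    std::optional<std::string> read() override {
        auto frame = upstream->read();
        if (!frame) return std::nullopt;
        return "enc(" + *frame + ")";
    }
    std::shared_ptr<PullSource> upstream;
};

// Stands in for the loop in Track::threadEntry(): read until end of stream.
std::vector<std::string> drainTrack(PullSource& source) {
    std::vector<std::string> written;
    while (auto buf = source.read()) written.push_back(*buf);
    return written;
}
```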

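Looking back, what does Stagefright actually do? To me it is mostly glue. It works at the MediaPlayerService layer and binds MediaSource, MediaWriter, the codecs and the upper-level MediaRecorder together, and that is probably its main role. Google replacing OpenCORE with it fits Google's usual pragmatic engineering style, as opposed to a complex academic one: much of what Google builds is complex too, but it generally tries to solve problems in the simplest way it can.

What may feel a bit odd is that MediaRecorder lives inside MediaPlayerService, even though recording and playback look like opposites. Maybe someday they'll be renamed or split apart; who knows.

Of course, this is only a brief overview. I'll try to analyze the codec side in a dedicated post later!

Some details are not covered here; where it matters, I'll list a few points worth noting: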

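1. Time-lapse recording

In this case the CameraSource is a CameraSourceTimeLapse.

Concretely, dataCallbackTimestamp calls skipCurrentFrame. To decide whether to skip, it keeps a few variables:

- mTimeBetweenTimeLapseVideoFramesUs (1E6/videoFrameRate): the interval between two frames
- mLastTimeLapseFrameRealTimestampUs: when the previous frame occurred

From these it works out how far apart two kept frames must be, and every frame in between is dropped via releaseOneRecordingFrame.

In other words, the driver delivers the same frames as usual; the dropping happens entirely in our software layer.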

For more on time-lapse, see https://en.wikipedia.org/wiki/Time-lapse_photography
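Here is a sketch of the frame-skipping idea. It is an assumption modeled on the description above, not a copy of CameraSourceTimeLapse. It separates two intervals: the real-time capture interval (what setupCameraSource passes in as mTimeBetweenTimeLapseFrameCaptureUs) and the playback spacing 1E6/videoFrameRate. A frame is kept only when at least the capture interval has passed since the last kept frame. Kept frames are re-stamped so they play back at the video frame rate, and everything else is dropped (where the real code would call releaseOneRecordingFrame). `TimeLapseFilter` is hypothetical.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical time-lapse frame filter.
class TimeLapseFilter {
public:
    TimeLapseFilter(int64_t captureIntervalUs, int32_t videoFrameRate)
        : mCaptureIntervalUs(captureIntervalUs),
          mVideoFrameIntervalUs(1000000 / videoFrameRate) {}

    // Returns true and sets *outTs if the frame is kept; false means "drop".
    bool accept(int64_t realTsUs, int64_t* outTs) {
        if (mHaveLast && realTsUs < mLastRealTsUs + mCaptureIntervalUs) return false;
        *outTs = mHaveLast ? mLastOutTsUs + mVideoFrameIntervalUs : 0;
        mHaveLast = true;
        mLastRealTsUs = realTsUs;
        mLastOutTsUs = *outTs;
        return true;
    }

private:
    int64_t mCaptureIntervalUs;
    int64_t mVideoFrameIntervalUs;
    bool mHaveLast = false;
    int64_t mLastRealTsUs = 0;
    int64_t mLastOutTsUs = 0;
};
```

With a 1 s capture interval and 25 fps playback, frames arriving every 0.5 s are halved, and the survivors are re-stamped 40 ms apart.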

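2. When recording needs the Camera, it goes through ICameraRecordingProxy, i.e. the RecordingProxy inside Camera (a BnCameraRecordingProxy).

Once the ICameraRecordingProxy has been passed through binder to the server process, it becomes a Bp, as follows: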

```cpp
case SET_CAMERA: {
    ALOGV("SET_CAMERA");
    CHECK_INTERFACE(IMediaRecorder, data, reply);
    sp<ICamera> camera = interface_cast<ICamera>(data.readStrongBinder());
    sp<ICameraRecordingProxy> proxy =
        interface_cast<ICameraRecordingProxy>(data.readStrongBinder());
    reply->writeInt32(setCamera(camera, proxy));
    return NO_ERROR;
} break;
```

CameraSource then uses it like this:

```cpp
// We get the proxy from Camera, not ICamera. We need to get the proxy
// to the remote Camera owned by the application. Here mCamera is a
// local Camera object created by us. We cannot use the proxy from
// mCamera here.
mCamera = Camera::create(camera);
if (mCamera == 0) return -EBUSY;
mCameraRecordingProxy = proxy;
mCameraFlags |= FLAGS_HOT_CAMERA;
```

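Open questions:

What is this List in CameraSource for?

Each time a frame is encoded, CameraSource stores it, and when the Buffer is released, it in turn releases these frame(s). Is this done for efficiency? Why not release each frame as soon as it has been encoded?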
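Here is a sketch of the hold-until-returned pattern the question describes. The source remembers every frame it hands to the encoder and gives a frame back to the camera only when the encoder signals that the buffer is done. One plausible reason, assumed here rather than taken from the text: in metadata mode (`storeMetaDataInVideoBuffers`, passed as `true` in setupCameraSource), the encoder's input refers to the camera's own memory, so returning the frame earlier could let the camera overwrite data still being encoded. `HoldingSource` and its members are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <functional>
#include <list>
#include <vector>

// Hypothetical sketch: frames stay "in flight" until the encoder returns their buffer.
class HoldingSource {
public:
    explicit HoldingSource(std::function<void(int)> releaseToCamera)
        : mReleaseToCamera(std::move(releaseToCamera)) {}

    // Hand frame `id` to the encoder and remember it.
    int read(int id) {
        mPendingFrames.push_back(id);
        return id;
    }

    // Called when the encoder returns the buffer (an observer-style callback).
    void signalBufferReturned(int id) {
        auto it = std::find(mPendingFrames.begin(), mPendingFrames.end(), id);
        if (it == mPendingFrames.end()) return;
        mPendingFrames.erase(it);
        mReleaseToCamera(id);  // only now may the camera reuse this memory
    }

    std::size_t inFlight() const { return mPendingFrames.size(); }

private:
    std::function<void(int)> mReleaseToCamera;
    std::list<int> mPendingFrames;
};
```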

Also, I can't help remarking once more on Google's frequent use of `delete this;`: clever, but it looks counterintuitive!
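The `delete this;` idiom usually shows up in intrusively ref-counted objects. The last `release()` destroys the object from inside its own member function. This is only safe for heap-allocated objects, and nothing may touch the object afterwards. A minimal sketch with a hypothetical `RefBuffer`, whose private destructor forces callers to go through `release()`:

```cpp
#include <cassert>

// Hypothetical intrusive ref-counted buffer that frees itself.
class RefBuffer {
public:
    static RefBuffer* create(int* liveCounter) { return new RefBuffer(liveCounter); }

    void addRef() { ++mRefs; }
    void release() {
        if (--mRefs == 0) delete this;  // `this` must not be used after this line
    }

private:
    explicit RefBuffer(int* liveCounter) : mLive(liveCounter) { ++*mLive; }
    ~RefBuffer() { --*mLive; }  // private: only release() can destroy the object

    int mRefs = 1;
    int* mLive;
};
```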

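Hi, I came across your analysis of MediaRecorder by chance and would like to ask a few questions. I hope you can help.

I've recently been using MediaRecorder for hardware H.264 encoding on Android. I pass a LocalSocket's FileDescriptor to setOutputFile, receive the data through the LocalSocket, and send it to the other side for decoding, and this path works. The problem is that the time it takes to read data from the LocalSocket varies greatly across Android devices: overall video latency exceeds 500 ms on slow devices and stays under 100 ms on fast ones.

So I'd like to ask: is MediaRecorder's hardware encoding stable and reliable in terms of performance? This is going into a product, and I'm new to encoding and decoding. If not, could you recommend an H.264 encoding approach for Android? I'm considering x264 via ffmpeg; would that work?

Thanks~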

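Are you doing ROM adaptation for many devices?

That said, it's hard to answer in general. You need to figure out where the slowness actually is. Above you say the socket is slow, but then you ask about an encoding approach...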

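Hi, I've also been assigned to investigate hardware H.264 encoding on Android. I've spent more than two weeks on it so far and found two candidate solutions. One uses Android's built-in OMXCodec; the other uses the gst-omx plugin of the GStreamer framework. Our goal is hardware encoding on every Android version with a single solution, from 2.2 (at least) to 4.0 (or later). I've read that before 4.0 this is done with MediaRecorder and after 4.0 with MediaCodec, and that both seem to be built on OMXCodec underneath. I'm currently studying gst-omx but don't know how to use it. I only know that gst-launch is used to invoke the plugin, and since we want to supply our own data source for encoding, it's unclear how to feed that source into gst-omx. Do you have any suggestions about these two approaches, or about hardware H.264 encoding on Android in general?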

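Hi, have you ever tested MediaRecorder on a Pandaboard with a webcam?

I'm trying this now. Taking pictures with the regular camera works,

but when I try to record video, MediaRecorder.start() always crashes.

Yet recording works on my phone.

Do you have any suggestions?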

Sorry… I haven't tried it on a Pandaboard. I'd suggest looking at the call stack or core dump, or asking in the Pandaboard Google Group.